"It's not the fast trends that have already happened. It's the fact they will be even faster tomorrow!" - Futurist Jim Carroll

Most people don't understand exponentiality.

They should, because most are living within it, and it defines their future - right now, particularly with the growth trends of AI. Exponentiality? I asked ChatGPT for an explanation that might help you comprehend it:

> Exponential growth can be simply understood as a snowball effect, where something starts off small but grows faster and faster as time goes on. To explain this concept in the context of AI, let's use a few analogies:
>
> Doubling Penny: Imagine you have a penny, and every day you double the amount you have. On the first day, you have 1 penny, on the second day you have 2, on the third day you have 4, and so on. By day 31, you'll have 1,073,741,824 pennies, or over 10.7 million dollars. In this analogy, the penny represents the power or capability of AI, which seems to be doubling in a short amount of time.
>
> Photocopying a Photocopy: Imagine you make a photocopy of a picture. Then, you take that copy and make a copy of it, and then copy that copy, and so on. The first few copies might not show much difference, but after several iterations, the changes become very apparent and you may end up with an image that's barely recognizable or vastly more detailed. This represents how AI, through rapid iterations and improvements, can evolve swiftly into something much more advanced.
>
> The Lily Pad Pond: Consider a pond with lily pads. Let's say the number of lily pads doubles every day: one lily pad on day 1, two on day 2, four on day 3, and so on. If it takes 30 days for the pond to be completely covered with lily pads, on day 29 it would only be half covered. This analogy helps to illustrate how exponential growth can lead to sudden and dramatic changes. In terms of AI, advancements might seem slow at first, but there will be a point where we see an explosion of capabilities.
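The penny and lily-pad arithmetic is easy to check for yourself. The short Python sketch below is an illustration only: it assumes exactly one doubling per day and, for the pond, full coverage on day 30 as in the analogy. The function names are hypothetical, made up for this example.

```python
def pennies_on_day(day: int) -> int:
    """Pennies held on a given day, starting with 1 penny on day 1 and doubling daily."""
    return 2 ** (day - 1)


def pond_coverage(day: int, full_day: int = 30) -> float:
    """Fraction of the pond covered on `day`, assuming coverage doubles daily
    and the pond is completely covered on `full_day`."""
    return 2.0 ** (day - full_day)


if __name__ == "__main__":
    # Dollar value of the penny pile on a few sample days
    for day in (1, 10, 20, 30, 31):
        dollars = pennies_on_day(day) / 100
        print(f"Day {day:2d}: ${dollars:,.2f}")

    # How much of the pond is covered in the final days
    for day in (25, 28, 29, 30):
        print(f"Day {day}: {pond_coverage(day):.1%} of the pond covered")
```

Day 30 ends at about $5.4 million and day 31 at about $10.7 million, so the "over 10 million dollars" figure assumes a 31-day month. The pond tells the same story: a quarter covered on day 28, half on day 29, and full on day 30. Most of the visible change happens in the last few doublings.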

That's what's happening with AI right now. For a series of upcoming keynotes in Boston, Palm Springs, and Hawaii, I'm using these slides to put things into perspective.

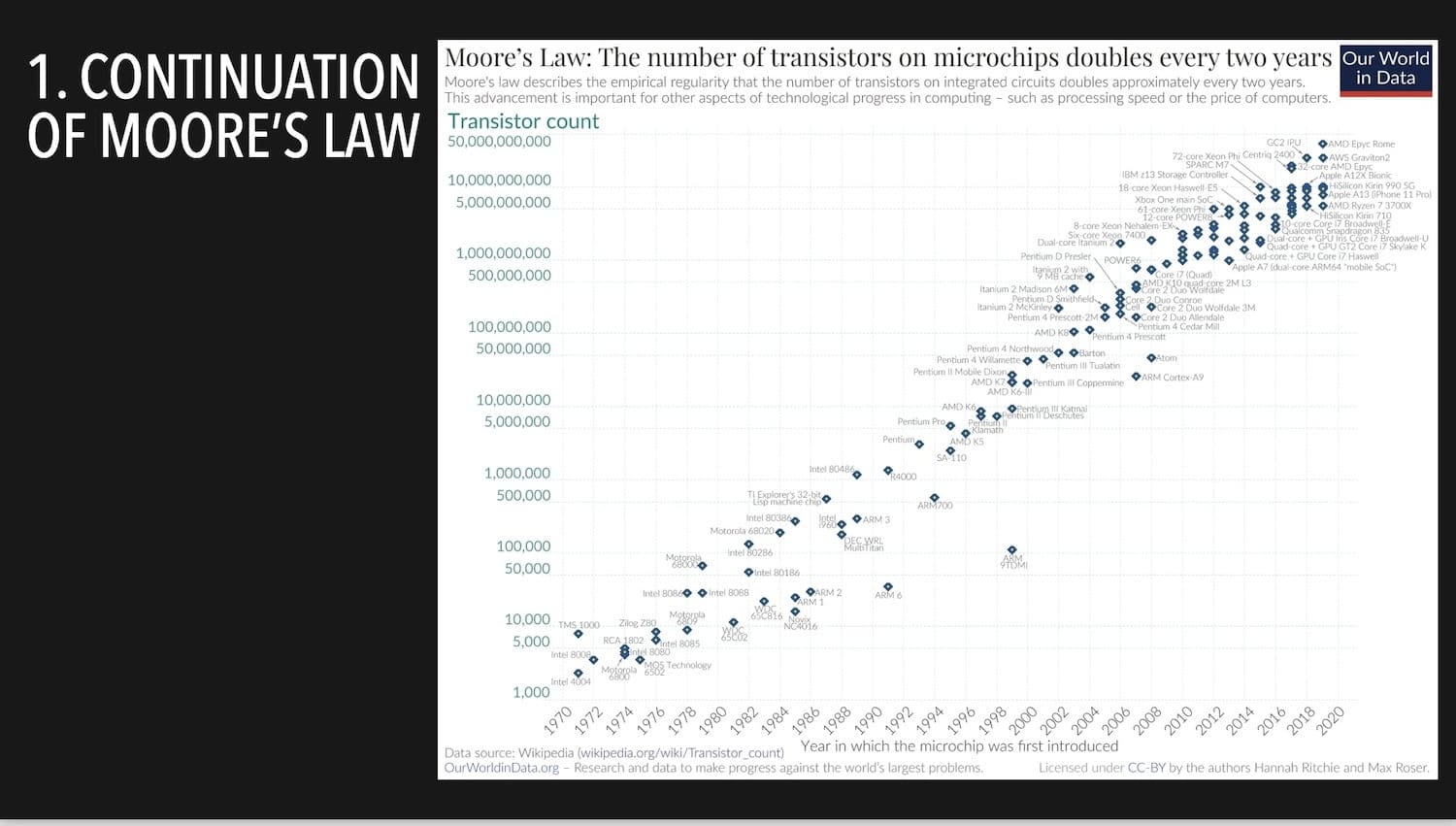

First, the continuation of Moore's Law - the number of transistors on a chip, and with it processing power, doubling roughly every 18 to 24 months - means that raw computing power continues to increase at an exponential rate:

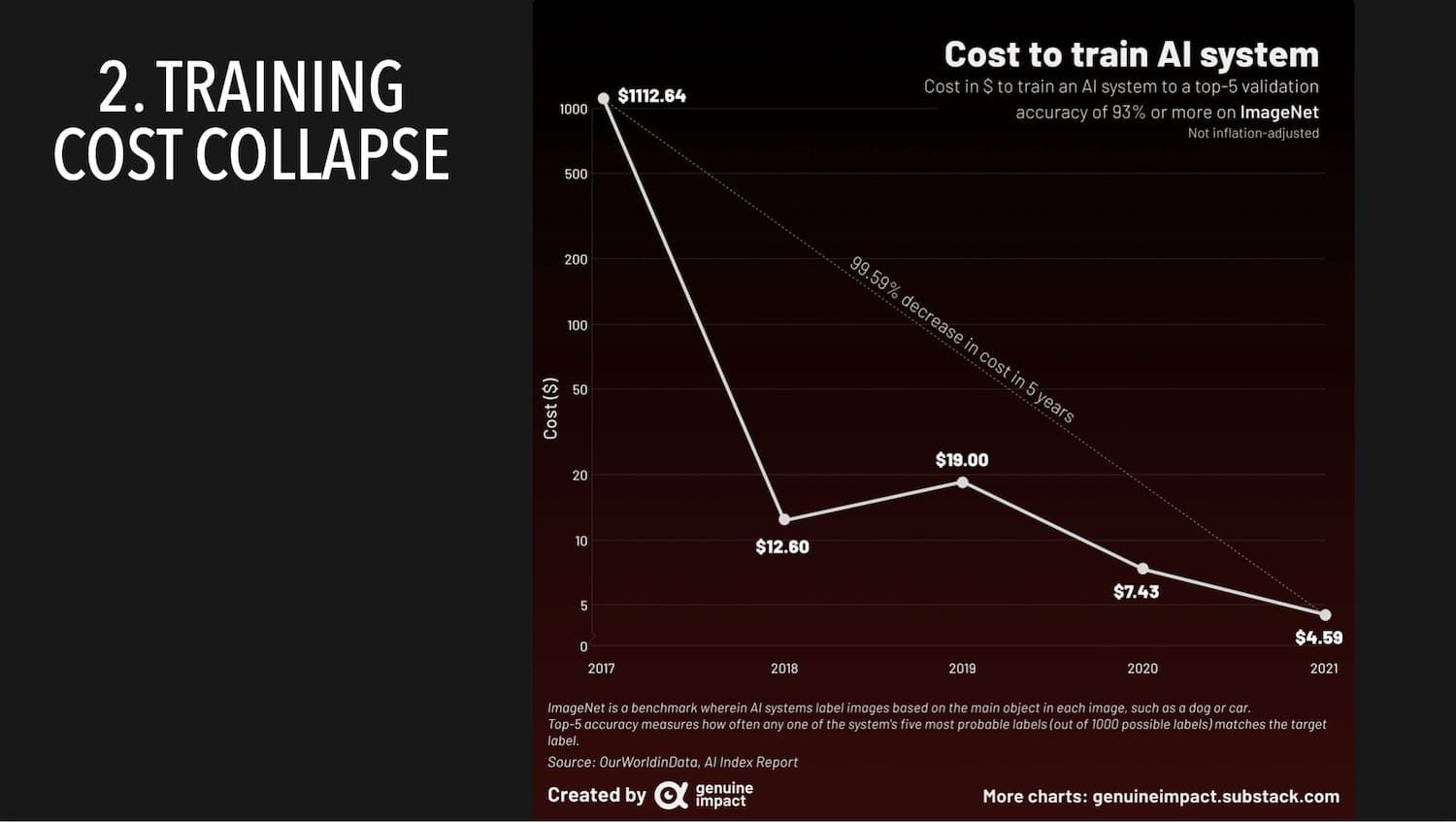
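To see what a steady 18-month doubling compounds to, here is a small Python sketch. It is illustrative arithmetic only: the `growth_factor` function is hypothetical, and it assumes a perfectly constant doubling period, which real chips never follow exactly.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Multiplicative growth after `years` if capacity doubles every `doubling_months`."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


if __name__ == "__main__":
    # Compounding at the 18-month pace
    for years in (3, 10, 20):
        print(f"{years:2d} years at 18 months/doubling: about {growth_factor(years):,.0f}x")

    # Same decade with a slower, 24-month doubling period
    print(f"10 years at 24 months/doubling: about {growth_factor(10, 24):,.0f}x")
```

At 18 months per doubling, a decade yields roughly a hundredfold increase; with the 24-month period often quoted for transistor counts, the same decade gives 2^5 = 32x. Once it compounds, the choice of doubling period matters a great deal.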

Second, the cost to 'train' these AI systems is collapsing:

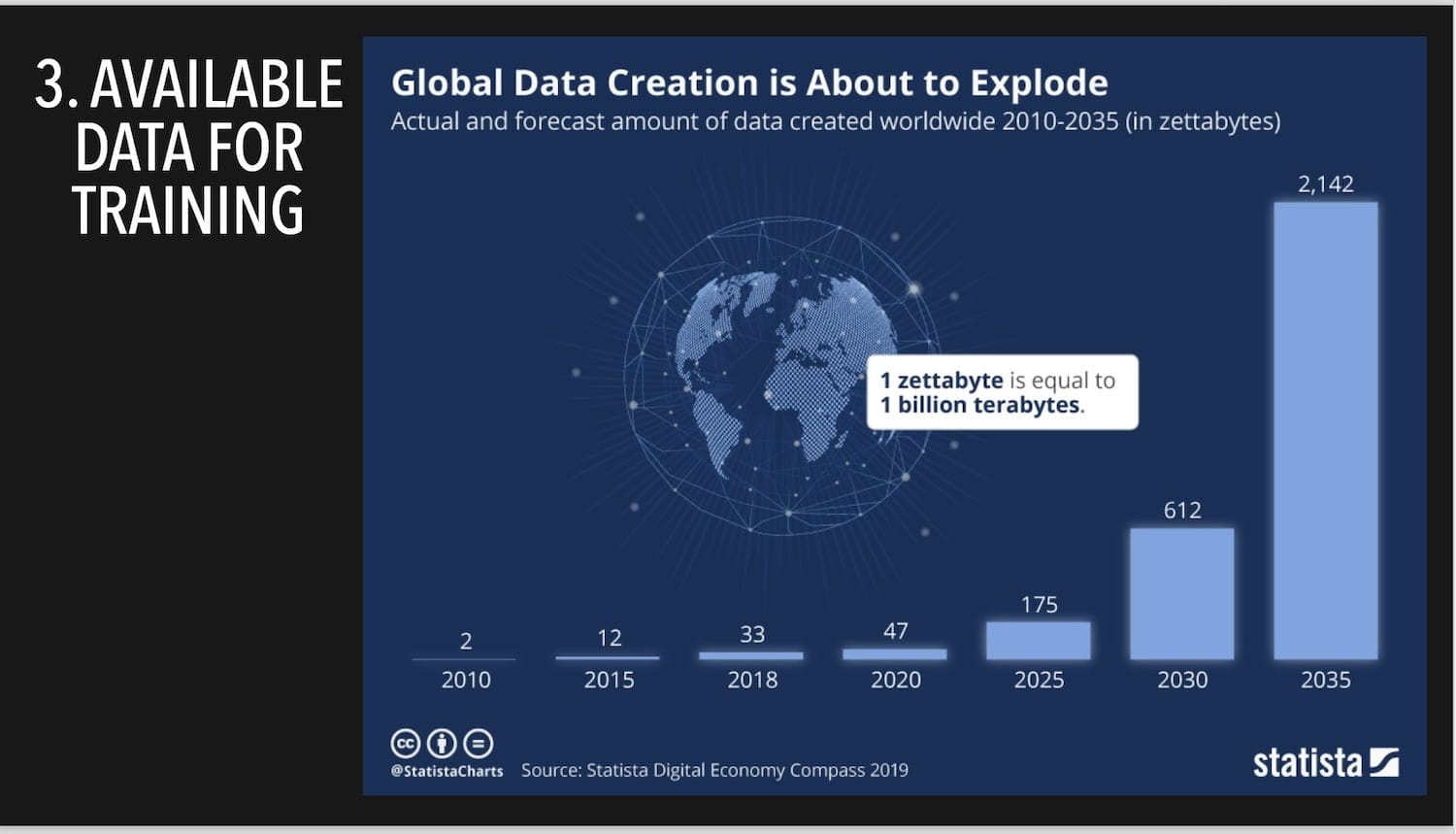

Third, we are in the midst of an absolute explosion in the availability of the data that can be used to 'train' AI systems:

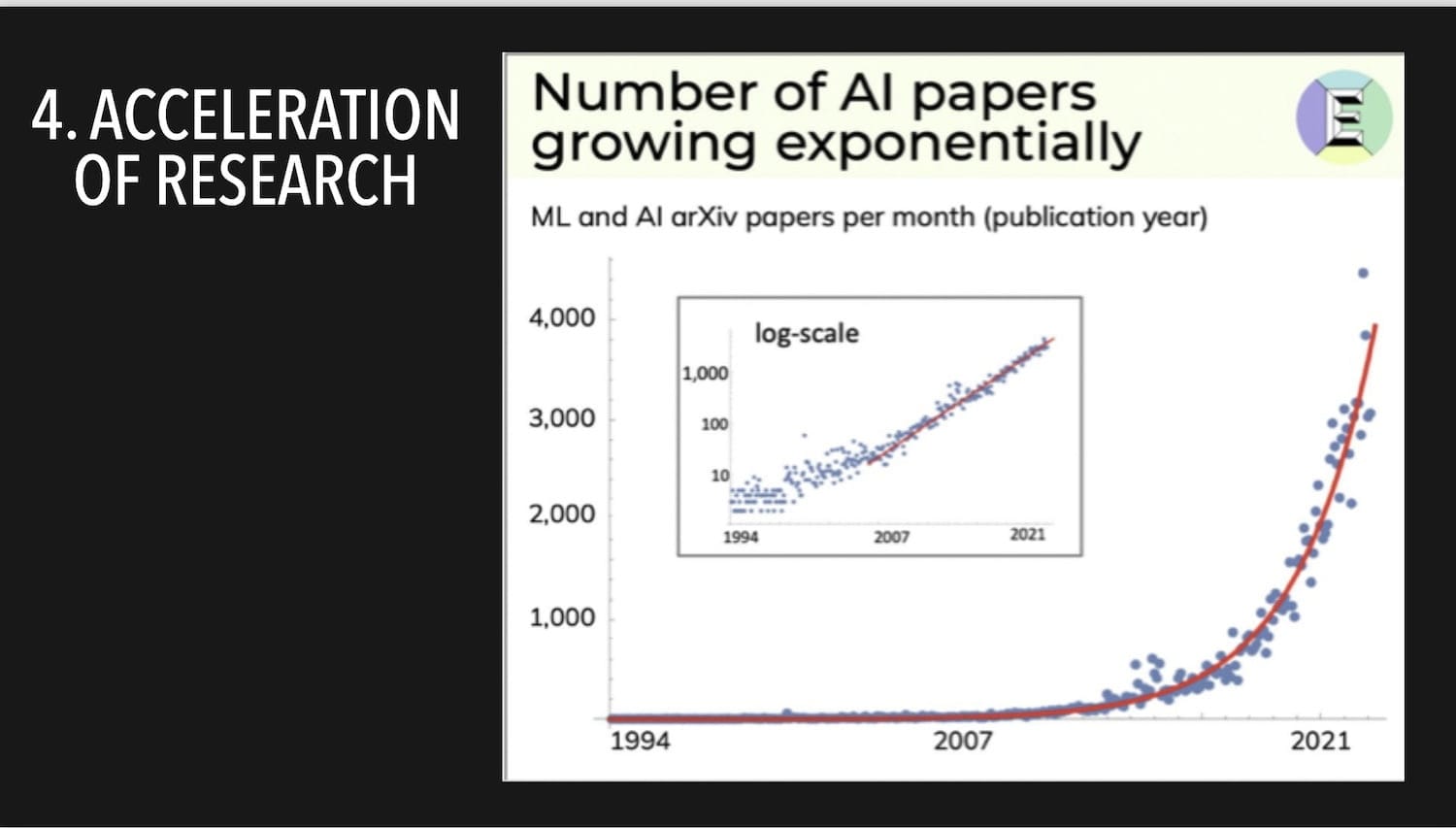

Fourth, we have seen a massive increase in AI research.

These trends are, in and of themselves, significant. But it's not what has happened so far that matters - it's how these trends continue to accelerate into the future.

To put that into perspective, I share this screenshot:

The numbers are staggering:

> As The Sun's article points out, GPT-4 may buck the trend toward ever-larger models. Back in 1998, Yann LeCun's breakthrough neural network, LeNet, sported 60,000 parameters (a rough measure of the network's complexity and its capacity to do useful things). Twenty years later, OpenAI's first version of GPT had about 117 million parameters. GPT-2 had 1.5 billion, and GPT-3, now two years old, has 175 billion. More parameters have generally meant better results. State-of-the-art multi-modal networks, which can go from text to image, text to text, or other combinations, are even more complex. The biggest are approaching 10 trillion parameters.

Think about that - we have gone from AI systems with 60,000 parameters to 117 million, then 1.5 billion, then 175 billion - and soon, perhaps 10 trillion.
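Those parameter counts can be turned into a rough doubling time. This Python sketch is back-of-the-envelope arithmetic, not a forecast: it uses only the LeNet (1998, 60,000 parameters) and GPT-3 (175 billion parameters) figures, assumes GPT-3's 2020 release year, and the helper `doubling_time_months` is hypothetical.

```python
import math


def doubling_time_months(start: float, end: float, years: float) -> float:
    """Average months per doubling needed to grow from `start` to `end` over `years`."""
    doublings = math.log2(end / start)
    return years * 12.0 / doublings


if __name__ == "__main__":
    lenet_params, lenet_year = 60_000, 1998         # LeNet, per the text
    gpt3_params, gpt3_year = 175_000_000_000, 2020  # GPT-3 (2020 release year assumed)

    ratio = gpt3_params / lenet_params
    months = doubling_time_months(lenet_params, gpt3_params, gpt3_year - lenet_year)
    print(f"Growth: about {ratio:,.0f}x, or {math.log2(ratio):.1f} doublings")
    print(f"Average doubling time: about {months:.1f} months")

    # Doublings still needed to reach the ~10 trillion parameters mentioned above
    print(f"175B -> 10T: {math.log2(10e12 / gpt3_params):.1f} more doublings")
```

That works out to roughly 21.5 doublings in 22 years, or about one doubling a year on average, which is faster than an 18-month Moore's Law pace. Going from 175 billion to 10 trillion would take fewer than six more doublings.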

You don't need to understand how AI works to understand its impact. You don't need to understand "AI parameters" to see that this is an absolutely massive growth rate - meaning that what is yet to come will be even bigger, faster, and more far-reaching than what we've seen so far. The models will keep becoming more sophisticated and more powerful.

Buckle up - it's going to be a ride!

Futurist Jim Carroll interprets the trends of the future so you don't have to!

Thank you for reading Jim Carroll's Daily Inspiration. This post is public so feel free to share it.