A photo from my keynote earlier in the week for the Controlled Environment Building Alliance in Palm Springs, where I'm speaking to the issue of the acceleration of AI.

Most of us were surprised and stunned by the sudden arrival of generative AI technologies like ChatGPT, Midjourney, and Stable Diffusion. We could ask a question and get a reasonably intelligent answer, or instruct a computer to draw an image and get magical results in an instant. No wonder we've come to believe that we are living in an era of magic. And, we are.

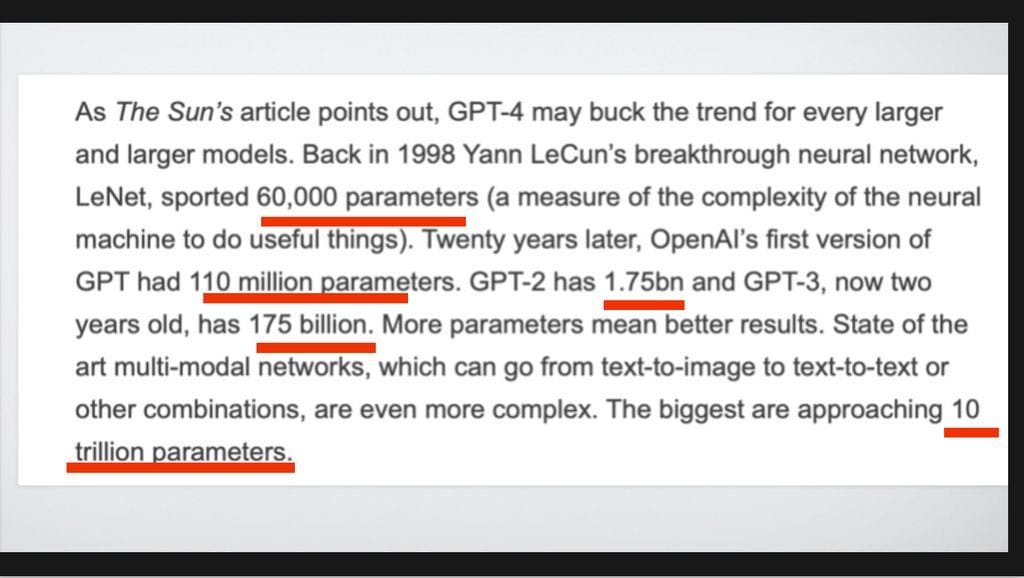

And yet, there is so much more to come, because things are speeding up to a rather ridiculous degree. The screenshot I used during my keynote puts things into perspective:

The numbers show the trend that is underway: a neural network model in 1998 was based on just 60,000 parameters. OpenAI's GPT-1 had 117 million parameters, and that grew to 1.5 billion parameters for GPT-2. The next version, GPT-3, was based on 175 billion parameters ... and GPT-4 is estimated at 1.75 trillion. What's next? Models that will approach 10 trillion parameters or more.

I don't know about you, but when I see exponential growth like this in any trend, I know that something significant is happening.
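To put a rough number on that growth, here is a minimal sketch that turns the parameter counts quoted above into growth multiples and an implied yearly rate. The GPT-4 figure is an unconfirmed estimate, and the 2023 end year is my own assumption for when GPT-4 arrived, so treat the result as a back-of-the-envelope illustration rather than a measurement.

```python
# Parameter counts quoted in the post. The GPT-4 figure is an estimate.
PARAMS_1998 = 60_000
PARAMS_GPT3 = 175e9
PARAMS_GPT4 = 1.75e12

# Assumption (not stated in the post): GPT-4 is dated to 2023.
YEAR_START, YEAR_END = 1998, 2023


def growth_multiple(start, end):
    """How many times larger `end` is than `start`."""
    return end / start


def annual_growth_rate(start, end, years):
    """Constant yearly multiplier that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years)


gpt3_to_gpt4 = growth_multiple(PARAMS_GPT3, PARAMS_GPT4)
overall = growth_multiple(PARAMS_1998, PARAMS_GPT4)
per_year = annual_growth_rate(PARAMS_1998, PARAMS_GPT4, YEAR_END - YEAR_START)

print(f"GPT-3 to GPT-4: about {gpt3_to_gpt4:.0f}x")
print(f"1998 to GPT-4: about {overall:,.0f}x")
print(f"Implied growth per year: about {per_year:.2f}x")
```

Under these assumptions, the implied rate works out to roughly a doubling in model size every year, sustained for a quarter of a century.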

Over at AssemblyAI, there's a good article giving this trend a bit more perspective:

> GPT-4, one of the largest models, is estimated to possess around 1.8 trillion parameters — 10x more than its predecessor, GPT-3. And this trend is set to continue its blistering pace. According to forecasts by Mustafa Suleyman, CEO of InflectionAI and co-founder of DeepMind, models will be 10x larger than GPT-4 within a year, and we’ll see a hundredfold increase within the next 2-3 years. These predictions underscore the remarkable speed at which the field progresses.

Source: "Why Language Models Became Large Language Models And The Hurdles In Developing LLM-based Applications" (AssemblyAI)

The challenge is that this type of growth might not continue without access to a lot more data that can be 'ingested' by a model. That's where the growth of knowledge comes in - I often like to point out that prior to the global pandemic, the volume of medical knowledge was doubling every year - but with the acceleration of medical science, it's now doubling every 78 days or less. There's more information to feed the beast.
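To see what that change in doubling time means, here is a small sketch that converts a doubling period into a yearly growth multiple. The function name and the 365-day year are my own choices for illustration. The two doubling periods are the ones quoted above.

```python
def annual_factor(doubling_days, days_per_year=365):
    """Yearly growth multiple for something that doubles every `doubling_days` days."""
    doublings_per_year = days_per_year / doubling_days
    return 2 ** doublings_per_year


before = annual_factor(365)  # doubling once a year
after = annual_factor(78)    # doubling every 78 days

print(f"Doubling every year: about {before:.0f}x per year")
print(f"Doubling every 78 days: about {after:.1f}x per year")
```

A 78-day doubling period packs more than four and a half doublings into a single year, so the yearly multiple climbs from 2x to somewhere above 25x.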

That's why when it comes to understanding a trend, it's not the trend that has occurred in the past that matters - it's the trend going forward. And this trend - AI - has momentum!

Futurist Jim Carroll knows that it's the exponential trends that really need to be watched.