"It's the types of crime that we can't yet imagine that we should be worried about!" - Futurist Jim Carroll

Later today, I'll be doing a talk for the senior leadership team of the RCMP - that's the Royal Canadian Mounted Police - on the future impact of AI.

I'll have a few hundred police and civilian officials in the room and will take a pretty broad but intense look into the future. I'll be covering the opportunity to use AI for crimefighting and public safety responsibilities, but will also take a look at the fact that the acceleration of AI leads to an accelerated risk of unknown crimes yet to be committed based on technologies that don’t yet exist!

Another way to put a spin on this? It’s the risk of unknown crimes yet to be committed based on technologies that don’t yet exist! That’s the future of policing!

(And yes, I've had a little fun with AI in this post. Look carefully, and that's me in uniform!)

The story of AI in policing is a complex one, involving many new opportunities and new skills to battle comprehensive new criminal risks - and one that is wrapped up in a lot of controversy when it comes to privacy and constitutional rights. And there is no doubt that these issues are going to become even more complex as things speed up.

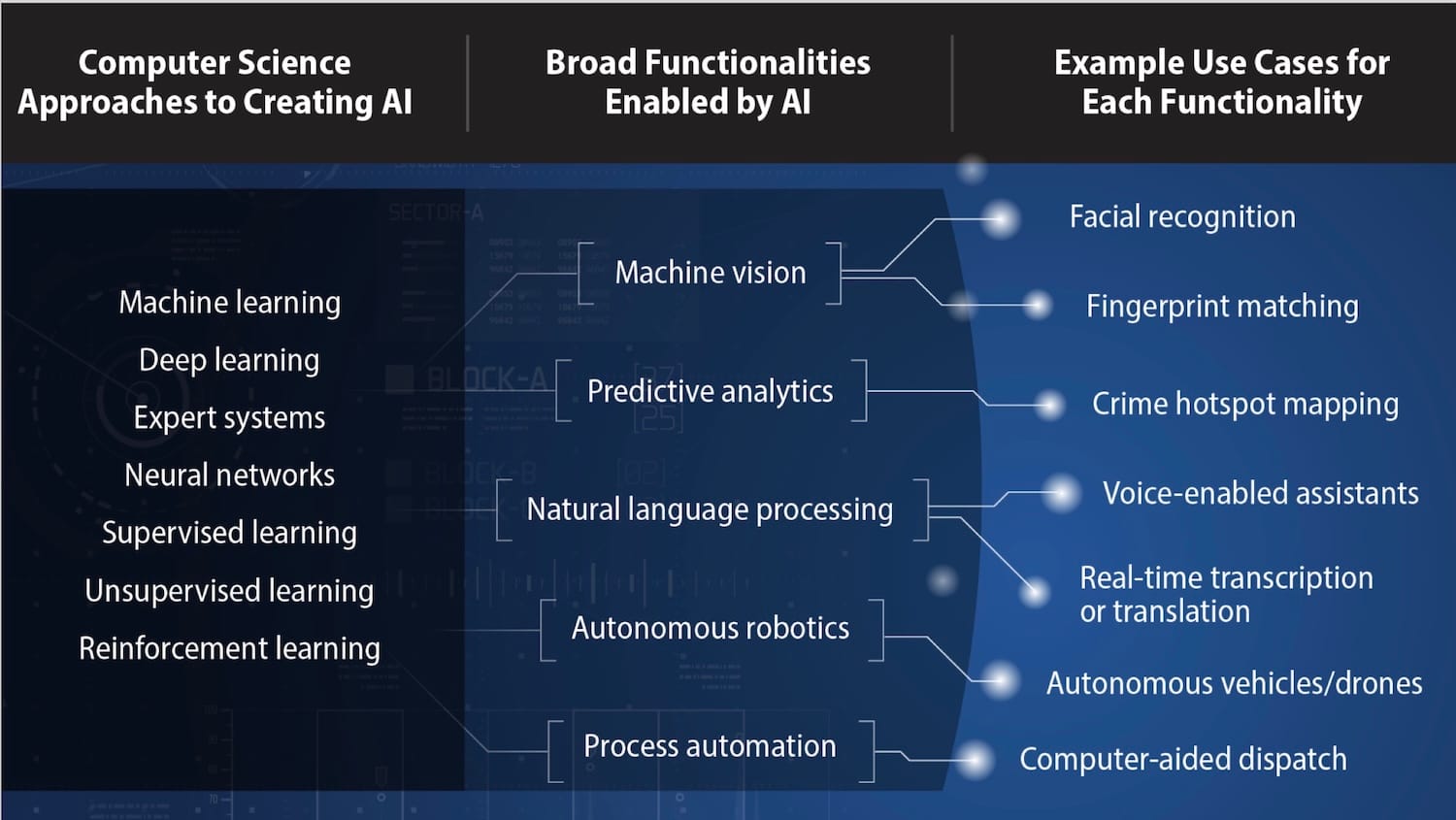

Let's start here. AI isn't necessarily new to the policing world - police forces have already been using various forms of AI for quite some time. For example, just this August, Sentry AI’s “digital coworker” Sentry Companion was used to help arrest 12 suspects in a mail and package theft ring in Santa Clara. The software was used to analyze security camera footage, looking for defined "suspicious activity" - utilizing 'machine vision,' an aspect of AI that has been with us for quite some time and is already quite mature. There are countless other examples, including controversial programs in Canada, Britain, and elsewhere.

“Predictive policing” is also a very real trend - just as Spotify might recommend various songs based on your musical preferences, or Amazon offers up products based on your shopping habits, predictive policing uses an algorithm to try to prevent crimes before they happen, based on analysis of video or other sources. There is also the field of OSINT, or 'open source intelligence', where police forces use analysis of the Web or social media channels to find existing and new, emerging threats. All of this comes with some degree of controversy, of course, with a lot of debate, as government legislators, ombudsmen, and public watchdog groups argue strongly against the privacy implications that come with this new era of intelligent technology, particularly video and image surveillance.

Even so, some “intelligence” doesn’t use actual AI. For quite some time, a few police forces have been using what are known as “super-recognizers” - police officers who have an exceptional ability to remember and identify faces. These individuals can go through massive amounts of security camera footage to identify suspects - and were used in 2011, for example, in connection with a series of riots in the UK. Still, artificial intelligence and facial recognition software are seen by some as the future of crime-fighting around the world.

But what about the future? From a crime perspective, it’s pretty bleak. There is no doubt that there will be a significant number of AI-driven crimes, wherein criminals will create deepfakes for purposes of extortion, algorithms that can hack into computer systems to commit financial crimes, or algorithms that can analyze and manipulate financial data to influence or cripple financial markets. And there is absolutely no doubt that there will be an increase in AI-related cybercrime. As more and more aspects of our lives move online, we’ll see cyber fraud, hacking, data theft, AI-managed identity theft, and much more. One estimate suggests that by 2033, 80% of all crimes will be cybercrimes. The big problem here? Perpetrators are often hidden behind anonymity and evidence is largely digital, and AI will only accelerate that opportunity.

Then there is the crazy Matrix-like science fiction crime of the future that will involve various aspects of AI - the hijacking for terrorist purposes of autonomous vehicles or drones, the hacking of brain-to-computer interface technology, or digital currency theft (which is already occurring). Remember my favorite phrase that starts "Companies that do not yet exist will build products not yet conceived.....?" The logical extension of that is that "nefarious elements will commit crimes not yet imagined using concepts not yet in existence with tools not yet in existence," or something like that.

What other issues might the RCMP be faced with? The list is vast. There are fast-emerging new digital identity crimes and synthetic personas - RCMP officers may need to unravel networks of AI-generated identities used for fraud, espionage, and misinformation campaigns. They may work with AI-driven analytics to identify patterns, trace digital fingerprints, and verify the authenticity of identities in cyberspace, which could become critical in fields from finance to national security. There is also a heightened risk of cyberattacks on critical infrastructure in Canada - everything from smart grids to autonomous transportation is a prime target for cybercriminals and state-sponsored attackers. The RCMP could play a critical role in securing vital national assets by detecting, investigating, and mitigating cyberattacks on healthcare, energy, and transportation networks, possibly using predictive AI to preemptively identify threats.

We also need to consider genetic data and crime - with genetic data increasingly stored online and used for medical, legal, and employment purposes, the RCMP could find itself managing complex crime cases involving the theft or misuse of this sensitive information. They may need to protect against bio-crimes that manipulate or weaponize genetic data, as well as address crimes where unauthorized access to genetic profiles leads to discrimination or exploitation.

What about AI-driven fraud and deepfake evidence? The RCMP will likely encounter AI-driven fraud where individuals are impersonated using deepfakes or audio manipulation, affecting cases from fraud to defamation. They’ll need tools that can distinguish real from synthetic media, which will be critical in maintaining trust in digital evidence. The ability to verify the authenticity of video and audio recordings may become a core competency in future investigations. (This issue is of particular interest to me, as I was an expert witness back in 2003 in a Federal Court case on the admissibility of the Internet as an evidentiary tool in a trial. Now imagine the impact of AI!)

In addition to these technological challenges, the RCMP will likely need to invest in training that combines traditional investigative skills with expertise in digital forensics, AI ethics, quantum computing, and cyber-psychology. Keeping the balance between maintaining public safety and respecting privacy and civil liberties will be paramount as the nature of policing evolves!

Here's the bottom line - as AI develops at a much faster speed with new technologies and ideas coming at us almost daily, criminals will likely find new and creative ways to use it to commit crimes - and that means that police forces must develop the skills, knowledge, and advanced capabilities to keep up. Police will need training on new investigative techniques and technologies involving AI - such as the use of advanced forensic software and tools that can collect and analyze digital evidence, and AI-powered video analytics tools that can detect anomalies and predict emerging threats. I've got a chart in my slide deck that walks through the nature of the opportunities.

Like any industry, career, and profession, the world of crime-fighting and public safety is in the midst of a massive change as a result of the acceleration of AI. It's not necessarily new to them - but the speed at which new issues, challenges, and opportunities are coming about is rather staggering.

Twenty years ago, Futurist Jim Carroll spoke to a conference of police chiefs and predicted the emergence of bodycams, noting that they could work both for them and against them.